– Welcome to class

– Listening to YouTube from the terminal

– Summarising papers with @Notion

– Reading papers collaboratively

– Attention! Self / cross, hard / soft

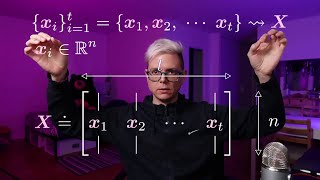

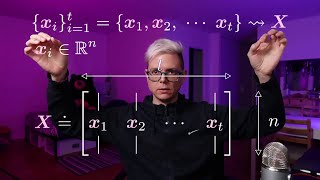

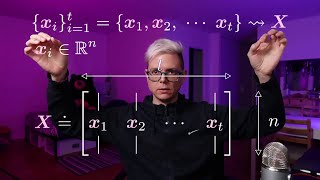

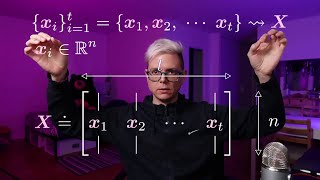

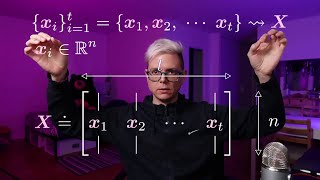

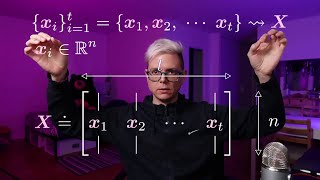

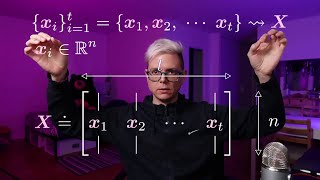

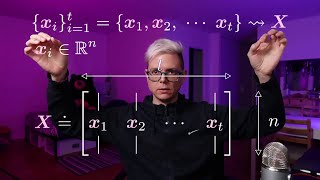

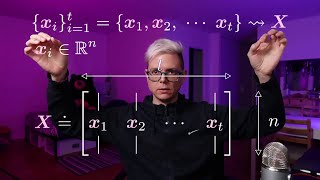

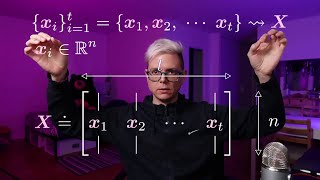

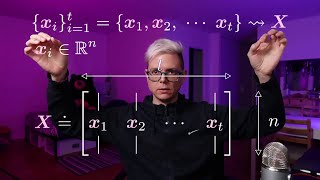

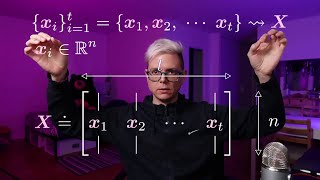

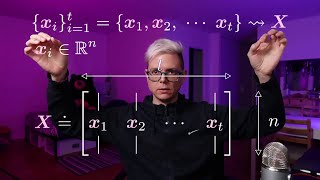

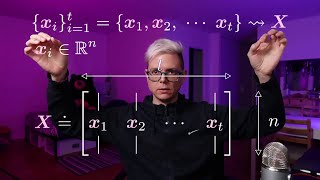

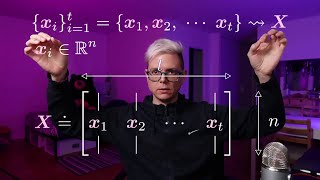

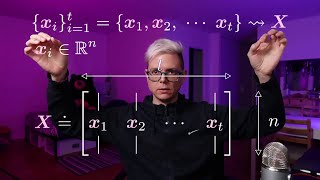

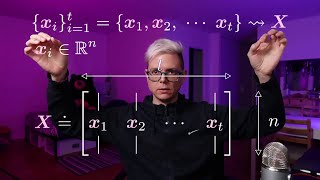

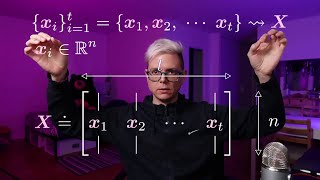

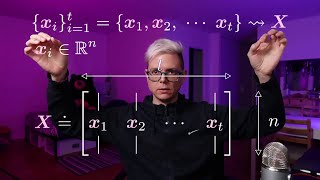
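
The soft / hard distinction can be sketched in a few lines. This is a pure-Python illustration, not the lecture's code. It assumes dot-product scores between one query and each input and an inverse temperature `beta`. The helpers `softmax`, `hard_attention` and `combine` are hypothetical names. Soft attention mixes all inputs, while hard attention picks exactly one.

```python
import math


def softmax(scores, beta=1.0):
    """Soft argmax: turn scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(beta * (s - m)) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def hard_attention(scores):
    """Hard argmax: a one-hot vector selecting the highest-scoring item."""
    best = max(range(len(scores)), key=lambda i: scores[i])
    return [1.0 if i == best else 0.0 for i in range(len(scores))]


def combine(xs, weights):
    """h = sum_i a_i x_i: weighted sum of the input vectors."""
    return [sum(w * x[d] for w, x in zip(weights, xs)) for d in range(len(xs[0]))]


xs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # a small set of inputs
query = [2.0, 1.0]
scores = [sum(q * x for q, x in zip(query, xi)) for xi in xs]  # [2.0, 1.0, 3.0]

h_soft = combine(xs, softmax(scores))         # mixes all three inputs
h_hard = combine(xs, hard_attention(scores))  # picks xs[2] only
```

Raising `beta` sharpens the soft weights until they approach the one-hot hard ones.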

– Use cases: set encoding!
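
Attention suits set encoding because a softmax-weighted sum does not depend on the order of its inputs. Below is a minimal pure-Python sketch. It assumes dot-product scores and a single hypothetical query vector `q`, which in a real model would be learned. `attend` is an illustrative helper, not the lecture's code.

```python
import math


def softmax(scores, beta=1.0):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(beta * (s - m)) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def attend(query, xs, beta=1.0):
    """Pool a set into one vector: h = sum_i a_i x_i, a = softmax(beta * [x_i . query])."""
    a = softmax([dot(x, query) for x in xs], beta)
    return [sum(w * x[d] for w, x in zip(a, xs)) for d in range(len(xs[0]))]


xs = [[0.5, -1.0], [2.0, 0.3], [-0.7, 1.2], [1.1, 1.1]]
q = [1.0, 0.5]  # hypothetical query vector

h = attend(q, xs)
h_reordered = attend(q, [xs[2], xs[0], xs[3], xs[1]])  # same set, different order
# h and h_reordered agree up to floating-point rounding: the encoding
# depends on which elements are in the set, not on their order.
```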

– Self-attention

Hello Alf, everyone here probably knows what you are trying to say, but I think the matrix-transpose animation should be fixed.
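
Self-attention without any learned projections can be sketched as follows. Each input acts as its own query against the whole set. This is a pure-Python illustration that assumes dot-product scores and an inverse temperature `beta`, and `self_attention` is a hypothetical helper name. In matrix form, with the inputs stacked as the columns of X, the scores are XᵀX, A is their column-wise softmax, and the outputs are H = X A.

```python
import math


def softmax(scores, beta=1.0):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(beta * (s - m)) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def self_attention(xs, beta=1.0):
    """h_i = sum_j a_ij x_j, where a_i = softmax(beta * [x_j . x_i for all j])."""
    hs = []
    for xi in xs:
        a = softmax([dot(xj, xi) for xj in xs], beta)
        hs.append([sum(w * xj[d] for w, xj in zip(a, xs)) for d in range(len(xi))])
    return hs


xs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
hs = self_attention(xs)  # one output per input

# Permutation equivariance: reordering the inputs reorders the outputs the same way.
perm = [2, 0, 1]
hs_perm = self_attention([xs[p] for p in perm])
```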

– Key-value store
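
A key-value store can be compared with its "soft" counterpart in a short sketch. A hard lookup returns the value whose key best matches the query, much like a dictionary. A soft lookup blends all values, weighted by key-query similarity. This is a pure-Python illustration: the keys, values, and helper names are hypothetical, and dot-product similarity is an assumption.

```python
import math


def softmax(scores, beta=1.0):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(beta * (s - m)) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def hard_lookup(query, keys, values):
    """Return the value whose key is most similar to the query (argmax)."""
    best = max(range(len(keys)), key=lambda i: dot(keys[i], query))
    return values[best]


def soft_lookup(query, keys, values, beta=1.0):
    """Return a mix of all values, weighted by softmax of key-query similarity."""
    a = softmax([dot(k, query) for k in keys], beta)
    return [sum(w * v[d] for w, v in zip(a, values)) for d in range(len(values[0]))]


keys = [[1.0, 0.0], [0.0, 1.0]]  # hypothetical key vectors
values = [[10.0], [20.0]]        # hypothetical value vectors
query = [0.9, 0.1]               # closer to the first key
```

The hard version has zero gradient with respect to the query almost everywhere, which is one reason attention uses the soft version. A large `beta` makes the soft lookup behave like the hard one.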

– Queries, keys, and values → self-attention
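
With queries, keys, and values, self-attention first projects every input three times and then performs a soft lookup. This is a pure-Python sketch under stated assumptions. The projection matrices `W_q`, `W_k`, `W_v` are plain linear maps with no bias term. The inverse temperature is set to 1/sqrt(d_k), the scaling used in the original Transformer paper. The helper names are hypothetical.

```python
import math


def matvec(W, x):
    """Linear map with no bias term: y = W x, with W given as a list of rows."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def softmax(scores, beta=1.0):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(beta * (s - m)) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def qkv_self_attention(xs, W_q, W_k, W_v):
    # Queries, keys and values are all computed from the SAME set xs.
    qs = [matvec(W_q, x) for x in xs]
    ks = [matvec(W_k, x) for x in xs]
    vs = [matvec(W_v, x) for x in xs]
    beta = 1.0 / math.sqrt(len(ks[0]))  # 1/sqrt(d_k) scaling
    hs = []
    for q in qs:
        a = softmax([dot(k, q) for k in ks], beta)
        hs.append([sum(w * v[d] for w, v in zip(a, vs)) for d in range(len(vs[0]))])
    return hs  # one output per input


I = [[1.0, 0.0], [0.0, 1.0]]  # identity projections, for illustration only
xs = [[1.0, 0.0], [0.0, 1.0]]
hs = qkv_self_attention(xs, W_q=I, W_k=I, W_v=I)
```

With identity projections this reduces to the projection-free self-attention. Learned `W_q`, `W_k`, `W_v` let the model decide separately what to ask for, what to match on, and what to return.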

– Queries, keys, and values → cross-attention
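
Cross-attention differs from self-attention only in where the queries come from. They are computed from one set (`ys`), while keys and values come from another set (`xs`). There is therefore one output per query, not per input. This is a pure-Python sketch that assumes bias-free projections and 1/sqrt(d_k) scaling. The helper names and example vectors are hypothetical.

```python
import math


def matvec(W, x):
    """Linear map with no bias term: y = W x, with W given as a list of rows."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def softmax(scores, beta=1.0):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(beta * (s - m)) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(ys, xs, W_q, W_k, W_v):
    qs = [matvec(W_q, y) for y in ys]  # queries from the first set
    ks = [matvec(W_k, x) for x in xs]  # keys from the other set
    vs = [matvec(W_v, x) for x in xs]  # values from the other set
    beta = 1.0 / math.sqrt(len(ks[0]))
    hs = []
    for q in qs:
        a = softmax([dot(k, q) for k in ks], beta)
        hs.append([sum(w * v[d] for w, v in zip(a, vs)) for d in range(len(vs[0]))])
    return hs  # len(hs) == len(ys)


I = [[1.0, 0.0], [0.0, 1.0]]
Z = [[0.0, 0.0], [0.0, 0.0]]
xs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # the set being attended over (illustrative)
ys = [[0.3, -0.2]]                         # a single query source (illustrative)

h = cross_attention(ys, xs, W_q=I, W_k=I, W_v=I)
# With all-zero keys every score is 0, so attention is uniform and the
# output is simply the mean of the values.
h_uniform = cross_attention(ys, xs, W_q=I, W_k=Z, W_v=I)
```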

– Implementation details

– The Transformer: an encoder-predictor-decoder architecture

– The Transformer encoder

– The Transformer “decoder” (which is an encoder-predictor-decoder module)

Regarding the 'h' after the predictor: what is the ground truth for that h? (Hidden representation)

– Jupyter Notebook and PyTorch implementation of a Transformer encoder

Hi Alf, thanks for the great explanation!! A question: why do we set bias=False? Why don't we need an affine transformation of the input space, just a rotation?